5 Takeaways From the Facebook Papers

This is about as simple as I could make it.

On October 25, a consortium of journalists from 17 reputable news outlets unleashed a flurry of more than 50 articles based on revelations gleaned from tens of thousands of files that Facebook whistleblower Frances Haugen smuggled out of the social media giant when she left in the spring. But that was just one event in a series that dates back to late last year.

If you want a clear explanation of how all of this happened, read this article from New York Times media reporter (and former editor-in-chief of BuzzFeed) Ben Smith. But here’s the gist, summarized by me, based on everything I’ve read:

December 2020: Frances Haugen meets Jeff Horwitz of the Wall Street Journal and, after vetting (auditioning, really) him, decides that he’s the right guy to receive the tens of thousands of documents she is gathering from Facebook. She appreciates his fairness and lack of interest in sensationalism.

Spring 2021: Haugen leaves Facebook.

Mid-September 2021: Horwitz and the WSJ publish 11 articles based on revelations they glean from the tens of thousands of documents that Haugen provided them.

October 3, 2021: Haugen appears on 60 Minutes to give an interview expounding on the WSJ reporting.

October 5, 2021: Haugen testifies before the Senate Commerce Subcommittee on Consumer Protection, explaining her perspective on the leaked papers and stating her belief that Facebook has violated securities law by not informing investors of its research findings.

October 7, 2021: Haugen and her team of lawyers and PR people organize a Zoom call with roughly 17 media outlets. She wants to provide them with the same papers she gave Horwitz and the WSJ, in hopes that they can pull out other information and cover angles that, in her view, the WSJ missed.

Mid-October 2021: The journalists are in a Slack group together, coordinating their coverage (to some degree) and feeling sorta awkward about it. They have agreed to hold their stories until Monday, October 25.

Friday, October 22, 2021: Like sprinters at the starting line, a couple of reporters jump the gun and publish their stories before the agreed-upon date, claiming their reporting drew on information obtained independently of Haugen and was therefore not bound to wait until Monday the 25th.

Monday, October 25, 2021: Reporters at at least 17 different news outlets publish over 50 stories on what are now called “The Facebook Papers.” (You can find a list of all of the articles here.)

So here we are. What do we learn from all this reporting on all of these papers? (The papers themselves aren’t available to the public.) And why should Christians care? Let me try to boil down what I have read so far (I have only read about half of the 50 articles) into five basic takeaways.

Consider this my distillation of everything I’ve learned. We won’t do a lot of deep-dive application here. That will hopefully be coming in the next few weeks[1], but what follows is my best summary of what you should know from these papers. Grab some coffee, and let’s get to it.

1) Facebook has routinely made choices that prioritize profits over people.

You and I are not Facebook customers. We are Facebook users. Facebook’s customers are the advertisers who spend billions of dollars per quarter to get their content into our feeds. We, the users, provide the content that fuels Facebook as a platform, so that it can make money from its customers, the advertisers.

I have written at length, both in this newsletter and in my forthcoming book, that Facebook routinely makes decisions and “mistakes” that just happen to serve its customers (advertisers) to the detriment of its users (you and me).

Last quarter (July–September), Facebook generated $29 billion in revenue. It has projected about $31 billion in revenue for the upcoming quarter, as the holiday season tends to generate more ad spend from businesses trying to get in front of users buying Christmas gifts.

The Facebook Papers revealed what people like me, who observe and write about Facebook, have long suspected but have never quite been able to prove: Facebook knows it has serious problems, but it willfully ignores them because addressing them would hurt its massive profits. Ellen Cushing of The Atlantic wrote, “Reading these documents is a little like going to the eye doctor and seeing the world suddenly sharpen into focus.”

In fact, the first real evidence of this came over a year ago, in May 2020, when (you guessed it) Jeff Horwitz reported on a study leaked from Facebook’s own internal researchers showing that the platform creates divisiveness, and that divisiveness is some of its best content:

“Our algorithms exploit the human brain’s attraction to divisiveness,” read a slide from a 2018 presentation. “If left unchecked,” it warned, Facebook would feed users “more and more divisive content in an effort to gain user attention & increase time on the platform.”

That presentation went to the heart of a question dogging Facebook almost since its founding: Does its platform aggravate polarization and tribal behavior?

The answer it found, in some cases, was yes.

Facebook had kicked off an internal effort to understand how its platform shaped user behavior and how the company might address potential harms. Chief Executive Mark Zuckerberg had in public and private expressed concern about “sensationalism and polarization.”

But in the end, Facebook’s interest was fleeting. Mr. Zuckerberg and other senior executives largely shelved the basic research, according to previously unreported internal documents and people familiar with the effort, and weakened or blocked efforts to apply its conclusions to Facebook products.

This was more than a year before the Facebook Papers were published, perhaps a bit of foreshadowing of what has just transpired.

A 2018 presentation from internal Facebook researchers, as revealed by the WSJ, showed that divisiveness and polarization increased the time people spent on Facebook, which in turn generated more money for Facebook. The presentation framed this as a problem. But it was a problem of Facebook’s own making. The Facebook Papers show (and we kind of knew this before we got them) that Facebook’s push to float “meaningful social interactions,” or “MSIs,” to the top of our feeds is what caused it. The Washington Post reports:

Once again, Facebook found its answer in the algorithm: It developed a new set of goal metrics that it called “meaningful social interactions,” designed to show users more posts from friends and family, and fewer from big publishers and brands. In particular, the algorithm began to give outsize weight to posts that sparked lots of comments and replies.

The downside of this approach was that the posts that sparked the most comments tended to be the ones that made people angry or offended them, the documents show. Facebook became an angrier, more polarizing place. It didn’t help that, starting in 2017, the algorithm had assigned reaction emoji — including the angry emoji — five times the weight of a simple “like,” according to company documents.
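For readers who like to see the mechanics, here is a toy sketch in Python of how a feed ranked by weighted engagement can end up rewarding anger. It is not Facebook’s code, and the names (`Post`, `engagement_score`, `rank_feed`) are hypothetical. The only number drawn from the reporting is the five-to-one weight of a reaction emoji over a like; the comment weight is an assumption standing in for the “outsize weight” the Post describes.

```python
from dataclasses import dataclass

# Toy weights for illustration only; NOT Facebook's actual values.
# The 5x reaction-to-like ratio is the one figure taken from the reporting;
# COMMENT_WEIGHT is a hypothetical stand-in for the "outsize weight"
# that comments and replies reportedly received.
LIKE_WEIGHT = 1
REACTION_WEIGHT = 5   # angry, haha, wow, etc.
COMMENT_WEIGHT = 15   # hypothetical


@dataclass
class Post:
    """A hypothetical feed item with simple interaction counts."""
    title: str
    likes: int
    reactions: int
    comments: int


def engagement_score(post: Post) -> int:
    """Weighted sum of interactions, the kind of signal an engagement-ranked feed sorts by."""
    return (
        post.likes * LIKE_WEIGHT
        + post.reactions * REACTION_WEIGHT
        + post.comments * COMMENT_WEIGHT
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Return posts ordered from highest to lowest score."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    feed = [
        Post("Family vacation photos", likes=200, reactions=10, comments=5),
        Post("Outrage-bait political post", likes=40, reactions=60, comments=30),
    ]
    for post in rank_feed(feed):
        print(engagement_score(post), post.title)
```

Run as-is, it puts the outrage-bait post first (790 to 325), even though the family photos got five times as many likes. That is, in miniature, the dynamic the documents describe.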

So, in 2017[2], Facebook ostensibly decided to suppress brand content and promote friends-and-family content to make the experience better for its users (you and me). It just so happens that this decision also forced brands to spend a lot more money to get their content in front of people, and it led to the problems described in the 2018 presentation the WSJ revealed in 2020.

This algorithm change, demoting organic posts by brands and promoting the content with the most discussion around it, is perhaps the keystone piece of evidence that Facebook chooses profits over people. It put arguments and fights at the top of everyone’s feeds and forced brands to spend a lot more money just to have their content seen on the platform.

CBS News provides an even deeper look into this problem, writing:

In one internal memo from November 2019, a Facebook researcher noted that "Angry," "Haha," and "Wow" reactions are heavily tied to toxic and divisive content.

"We consistently find that shares, angrys, and hahas are much more frequent on civic low-quality news, civic misinfo, civic toxicity, health misinfo, and health antivax content," the Facebook researcher wrote.

In April 2019, political parties in Europe complained to Facebook that the News Feed change was forcing them to post provocative content and take up extreme policy positions.

One political party in Poland told Facebook that the platform's algorithm changes forced its social media team to shift from half positive posts and half negative posts to 80% negative and 20% positive.

That bit on the political parties having to change their policy stances to fit the Facebook algorithm is nuts.

Facebook employees themselves are conflicted about the true purpose and nature of the company. Following the release of the Facebook Papers last week, the Associated Press reported:

There is a deep-seated conflict between profit and people within Facebook — and the company does not appear to be ready to give up on its narrative that it’s good for the world even as it regularly makes decisions intended to maximize growth.

“Facebook did regular surveys of its employees — what percentage of employees believe that Facebook is making the world a better place,” [Sophie] Zhang [former Facebook data scientist] recalled.

“It was around 70 percent when I joined. It was around 50 percent when I left,” said Zhang, who was at the company for more than two years before she was fired in the fall of 2020.

It was Haugen herself who popularized the phrase “Facebook chooses profits over people” when she appeared on 60 Minutes and testified before Congress last month.

I don’t know how many other ways I need to show it or prove it: Facebook makes more money when it makes its users more miserable. I’ll say more about this at the bottom of the newsletter today, but I think this is the most compelling reason why Christians should care.

2) Facebook is full of great researchers doing important work, but they’re stymied at the top.

Kevin Roose, a tech reporter for the New York Times, has said at various times and in various ways about Facebook and its disgruntled employees, “If you hire a bunch of young people and tell them they’re there to change the world, you better be careful or they’re liable to believe you.”[3] It’s true, isn’t it? This is why Facebook ends up with people like Frances Haugen leaking documents and holding the company publicly accountable. People like her join Facebook to change the world for the better, and when their attempts to do so are blocked by top executives, they’re going to leave and tell people why.

Another revelation from the Facebook Papers is that Facebook has a bunch of researchers who are fully aware of the problems the company has wrought. Over the years, those researchers have presented data on Instagram harming teen girls, misinformation running rampant on the platform, divisiveness driving more profit for Facebook, and other subjects. Facebook has taken enough action to defend itself, but not enough to fix the problems.

Just consider these two excerpts from Horwitz’s piece from early October (bolding mine):

“Thirty-two percent of teen girls said that when they felt bad about their bodies, Instagram made them feel worse,” the researchers said in a March 2020 slide presentation posted to Facebook’s internal message board, reviewed by The Wall Street Journal. “Comparisons on Instagram can change how young women view and describe themselves.”

For the past three years, Facebook has been conducting studies into how its photo-sharing app affects its millions of young users. Repeatedly, the company’s researchers found that Instagram is harmful for a sizable percentage of them, most notably teenage girls.

“We make body image issues worse for one in three teen girls,” said one slide from 2019, summarizing research about teen girls who experience the issues.

“Teens blame Instagram for increases in the rate of anxiety and depression,” said another slide. “This reaction was unprompted and consistent across all groups.”

….

In public, Facebook has consistently played down the app’s negative effects on teens, and hasn’t made its research public or available to academics or lawmakers who have asked for it.

“The research that we’ve seen is that using social apps to connect with other people can have positive mental-health benefits,” CEO Mark Zuckerberg said at a congressional hearing in March 2021 when asked about children and mental health.

In May, Instagram head Adam Mosseri told reporters that research he had seen suggests the app’s effects on teen well-being is likely “quite small.”

POLITICO has some great reporting on the more political angles of these papers (bolding mine).

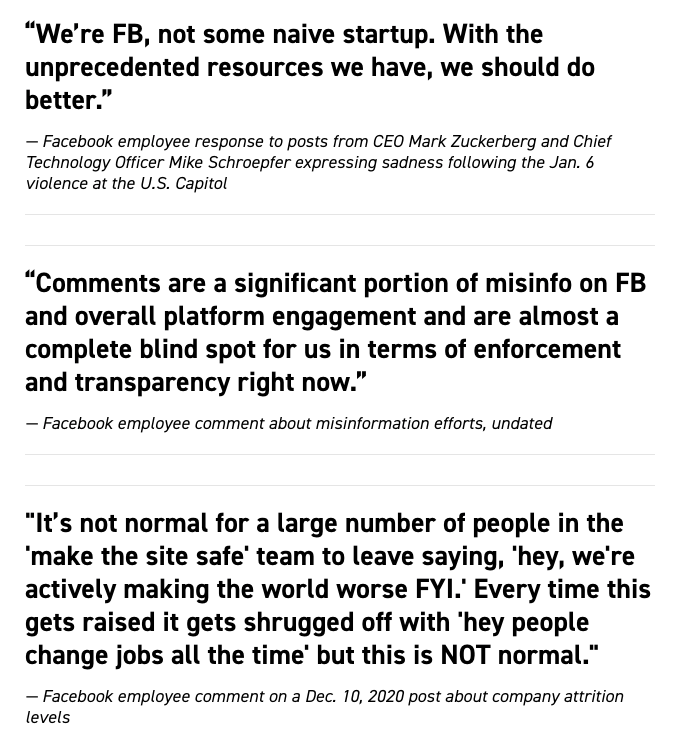

“How are we expected to ignore when leadership overrides research based policy decisions to better serve people like the groups inciting violence today,” one employee asked on a Jan. 6 message board, responding to memos from CEO Mark Zuckerberg and CTO Mike Schroepfer. “Rank and file workers have done their part to identify changes to improve our platform but have been actively held back.”

Gilad Edelman of WIRED wrote of Facebook’s frazzled researchers (bolding mine):

Facebook is a big company. Not every internal research finding or employee suggestion is worth listening to. Still, the frustration expressed in the leaked files strongly suggests that Facebook’s leaders have been erring too heavily in the opposite direction.

The release of these documents has obviously created a massive headache for the company. But it also reveals that Facebook, to its credit, employs some very thoughtful people with good ideas. The company should consider listening to them more.

Researchers within Facebook are very aware of the issues their platform both reveals and perpetuates. But it seems as though their findings are often squelched at the top levels of the company.

3) Facebook is not in control of its own platforms.

I think the very least we can ask of social media platforms, whoever they are and whatever influence they have, is that they be in control of their own platforms. Facebook, the dominant force in social media around the world, may not have control of its platform. It’s like those horror or sci-fi movies in which a scientist creates a specimen or invents a robot that goes rogue and escapes the lab.

Facebook employees below the C-suite level recognize that they are not in control of their platform. Here’s POLITICO’s chronicle, mined from the Facebook Papers, of how things went around the Jan. 6 insurrection:

On the day of the Capitol riot, employees began pulling levers to try to stave off the peril.

The Elections Operations Center — effectively a war room of moderators, data scientists, product managers and engineers that monitors evolving situations — quickly started turning on what they call “break the glass” safeguards from the 2020 election that dealt more generally with hate speech and graphic violence but which Facebook had rolled back after Election Day.

Sometime late on Jan. 5 or early Jan. 6, engineers and others on the team also readied “misinfo pipelines,” tools that would help them see what was being said across the platform and get ahead of the spread of misleading narratives — like one that Antifa was responsible for the riot, or another that then-President Donald Trump had invoked the Insurrection Act to stay in power. Shortly after, on Jan. 6, they built another pipeline to sweep the site for praise and support of “storm the Capitol” events, a post-mortem published in February shows.

But they faced delays in getting needed approvals to carry out their work. They struggled with “major” technical issues. And above all, without set guidance on how to address the surging delegitimization material they were seeing, there were misses and inconsistencies in the content moderation, according to the post-mortem document — an issue that members of Congress, and Facebook’s independent oversight board, have long complained about.

I could list a few more examples of how Facebook really isn’t in control of its platform, but those examples flow into the next point, so let’s look at them there.

4) The global South is much more endangered by Facebook than the West is.

I have to say that this was probably the most jarring part of the Facebook Papers for me. It’s really easy to sit here in America or someplace in the West and think, “Facebook is bad because it promotes content that misleads people and makes people generally feel worse.” And while that’s all verifiable and sad, it’s nothing compared to the gore and hate Facebook is hosting around the world in countries that are primarily not white. As Ellen Cushing wrote in her tremendous article for The Atlantic, “These documents show that the Facebook we have in the United States is actually the platform at its best.” She continues (bolding mine):

According to the documents, Facebook is aware that its products are being used to facilitate hate speech in the Middle East, violent cartels in Mexico, ethnic cleansing in Ethiopia, extremist anti-Muslim rhetoric in India, and sex trafficking in Dubai. It is also aware that its efforts to combat these things are insufficient. A March 2021 report notes, “We frequently observe highly coordinated, intentional activity … by problematic actors” that is “particularly prevalent—and problematic—in At-Risk Countries and Contexts”; the report later acknowledges, “Current mitigation strategies are not enough.”

In some cases, employees have successfully taken steps to address these problems, but in many others, the company response has been slow and incomplete. As recently as late 2020, an internal Facebook report found that only 6 percent of Arabic-language hate content on Instagram was detected by Facebook’s systems. Another report that circulated last winter found that, of material posted in Afghanistan that was classified as hate speech within a 30-day range, only 0.23 percent was taken down automatically by Facebook’s tools. In both instances, employees blamed company leadership for insufficient investment.

In many of the world’s most fragile nations, a company worth hundreds of billions of dollars hasn’t invested enough in the language- and dialect-specific artificial intelligence and staffing it needs to address these problems. Indeed, last year, according to the documents, only 13 percent of Facebook’s misinformation-moderation staff hours were devoted to the non-U.S. countries in which it operates, whose populations comprise more than 90 percent of Facebook’s users. (Facebook declined to tell me how many countries it has users in.) And although Facebook users post in at least 160 languages, the company has built robust AI detection in only a fraction of those languages, the ones spoken in large, high-profile markets such as the U.S. and Europe—a choice, the documents show, that means problematic content is seldom detected.

The Associated Press reports on a Facebook employee who set out to test the company’s content moderation efforts in India. They write (bolding mine):

In the note, titled “An Indian Test User’s Descent into a Sea of Polarizing, Nationalistic Messages,” the employee whose name is redacted said they were “shocked” by the content flooding the news feed which “has become a near constant barrage of polarizing nationalist content, misinformation, and violence and gore.”

Seemingly benign and innocuous groups recommended by Facebook quickly morphed into something else altogether, where hate speech, unverified rumors and viral content ran rampant.

The recommended groups were inundated with fake news, anti-Pakistan rhetoric and Islamophobic content. Much of the content was extremely graphic.

One included a man holding the bloodied head of another man covered in a Pakistani flag, with an Indian flag in the place of his head. Its “Popular Across Facebook” feature showed a slew of unverified content related to the retaliatory Indian strikes into Pakistan after the bombings, including an image of a napalm bomb from a video game clip debunked by one of Facebook’s fact-check partners.

“Following this test user’s News Feed, I’ve seen more images of dead people in the past three weeks than I’ve seen in my entire life total,” the researcher wrote.

This is India, a country of 1.3 billion people! Facebook even has employees on the ground in India. What about Dubai, where Facebook is used for human trafficking? What about Ethiopia, where Facebook is used to stoke violence? Facebook cannot control its own platform, and it isn’t Americans who bear the brunt of that problem; it’s people who are largely non-white and non-English-speaking.

I won’t quote a bunch more articles here, but here are some for you if you’d like to keep reading:

Facebook Services Are Used to Spread Religious Hatred in India, Internal Documents Show

Facebook has known it has a human trafficking problem for years. It still hasn't fully fixed it

Facebook knew about, failed to police, abusive content globally

This line from WIRED pretty well sums up Facebook’s indefensible neglect of the global South: “Arabic is the third-most spoken language among Facebook users, yet an internal report notes that, at least as of 2020, the company didn’t even employ content reviewers fluent in some of its major dialects.”

5) Facebook is hemorrhaging young people, and it terrifies them.

The best news for Facebook to come out of this massive document dump is that one could argue the FTC’s monopoly case against Facebook is dead on arrival. Why? The Facebook Papers reveal that the company (including Instagram) is losing young people left and right, and that TikTok and Snapchat are pillaging its younger users.

Earlier this year, a researcher at Facebook shared some alarming statistics with colleagues.

Teenage users of the Facebook app in the US had declined by 13 percent since 2019 and were projected to drop 45 percent over the next two years, driving an overall decline in daily users in the company’s most lucrative ad market. Young adults between the ages of 20 and 30 were expected to decline by 4 percent during the same timeframe. Making matters worse, the younger a user was, the less on average they regularly engaged with the app. The message was clear: Facebook was losing traction with younger generations fast.

The “aging up issue is real,” the researcher wrote in an internal memo. They predicted that, if “increasingly fewer teens are choosing Facebook as they grow older,” the company would face a more “severe” decline in young users than it already projected.

Facebook is losing young people. If you’ve ever wondered what it looked like when Myspace flailed to survive as Facebook drained away its youthful lifeblood, we may be watching the same thing happen to Facebook as other platforms leech its life away. I don’t think Facebook is going away anytime soon, but they know the situation is dire because they know this: when a social media company loses young people, its days are numbered.

Why Should Christians Care?

Last week I joined Morgan Lee and Ted Olsen on Christianity Today’s Quick to Listen podcast to discuss all of this, well before I sat down to write this piece over the weekend. So if you want to hear more discussion, you can find the podcast wherever you listen to such things, or here on the CT website.

But in our discussion, we eventually got to the point of why Christians should even care about all of this stuff. I think this is a good question to ask because, after all, we can’t care about everything. Why should we care about all of these Facebook revelations?

I will write more on the Christian applications of all of this in the coming weeks, but this is my best summary of it as a conclusion to this marathon email:

Facebook is the most pervasive social media platform in the world—collectively, it shapes how we think, believe, and act like no other medium.

Because it plays such a central role in our understanding of ourselves, our world, and other people, we should care about how it affects us as we try to live out our calling to love God and love others.

Facebook makes more money when people spend more time on its platforms, and people spend more time on its platforms when they’re mad, fighting, and generally upset—Facebook’s own data shows that this is true, and they shelved it.

The factors that have to be in place for Facebook to flourish (that is, make money) are in opposition to the flourishing of its users. It is not a neutral platform or “tool”—in fact, there is no such thing in the realm of the social internet.

When Facebook flourishes, its users flounder.

As followers of Jesus who want others to come to know him and flourish, it should bother us that the most prevalent medium of communication across the world is fueled by content that is in opposition to human flourishing. It should lead us to call into question how we use the platform and, frankly, whether we should use the platform at all.

Next week, we’ll dive more deeply into the flawed logic that “Social media is just like the printing press,” and “Facebook is a neutral tool.”

[1] I am also writing a book right now, and this newsletter is clocking in around 4,300 words, which is basically a book chapter. So, sorry, Drew, that I paused writing a chapter of the book to write this, but I’m ahead of schedule on the manuscript, so we good. :-)

[2] Or 2018; reports differ on when this actually went into effect.

[3] This is my paraphrase. I have heard him say this in various places, but couldn’t find it anywhere in print.