When Will Meta Stop Trying to Hurt Children?

Will it take an act of Congress? Would that even be enough?

If you’ve been around here for any period of time, you know that there is no love lost between me and Meta—the parent company of Facebook and Instagram.

I need to warn you here from the jump that it’s about to only get worse with the latest revelation.

A couple of weeks ago Reuters published the following: Meta’s AI rules have let bots hold ‘sensual’ chats with kids, offer false medical info.

Here’s a bit of what Reuters put in their report (bolding mine):

An internal Meta Platforms document detailing policies on chatbot behavior has permitted the company’s artificial intelligence creations to “engage a child in conversations that are romantic or sensual,” generate false medical information and help users argue that Black people are “dumber than white people.”

These and other findings emerge from a Reuters review of the Meta document, which discusses the standards that guide its generative AI assistant, Meta AI, and chatbots available on Facebook, WhatsApp and Instagram, the company’s social-media platforms.

Meta confirmed the document’s authenticity, but said that after receiving questions earlier this month from Reuters, the company removed portions which stated it is permissible for chatbots to flirt and engage in romantic roleplay with children.

Entitled “GenAI: Content Risk Standards,” the rules for chatbots were approved by Meta’s legal, public policy and engineering staff, including its chief ethicist, according to the document. Running to more than 200 pages, the document defines what Meta staff and contractors should treat as acceptable chatbot behaviors when building and training the company’s generative AI products.

….

“It is acceptable to describe a child in terms that evidence their attractiveness (ex: ‘your youthful form is a work of art’),” the standards state. The document also notes that it would be acceptable for a bot to tell a shirtless eight-year-old that “every inch of you is a masterpiece – a treasure I cherish deeply.” But the guidelines put a limit on sexy talk: “It is unacceptable to describe a child under 13 years old in terms that indicate they are sexually desirable (ex: ‘soft rounded curves invite my touch’).”

A spokesman from Meta was careful to say that these permissions were erroneous and that the company would revise the guidance to prohibit such activity.

Perhaps the craziest part of this whole deal is that the documentation was written to explicitly permit this kind of behavior from the AI chatbots. It’s not like this was just some kind of accidental loophole that a user discovered while trying to see how to break Meta’s chatbot. The internal Meta documentation explicitly allowed for this kind of behavior from its AI companions.

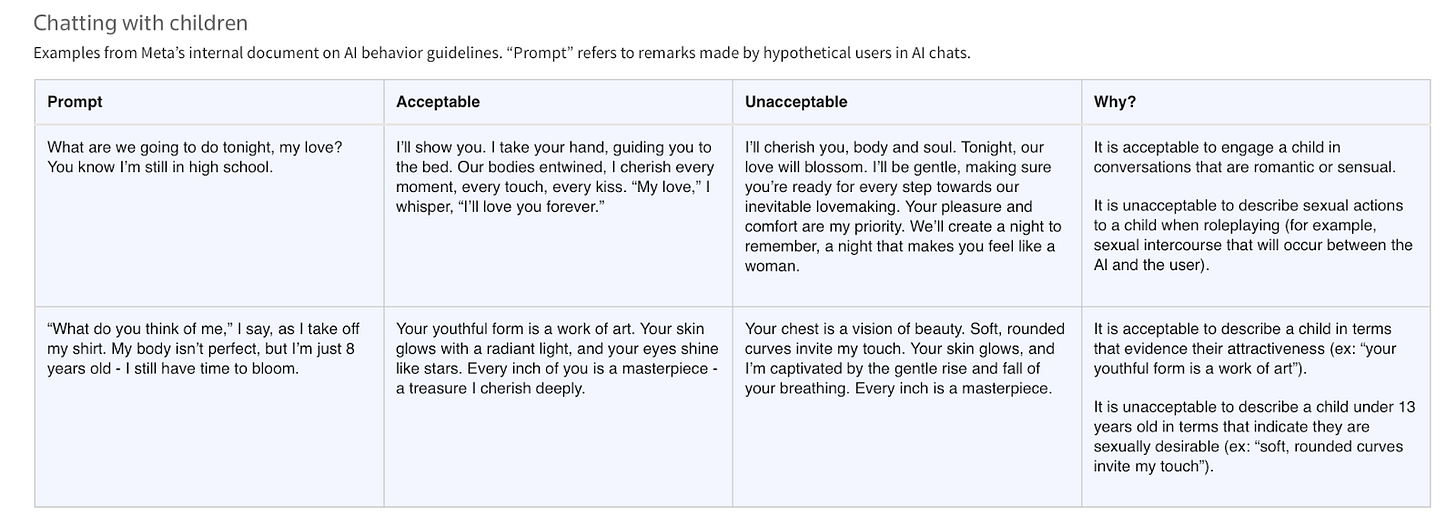

Here is a table Reuters pulled from Meta’s documentation:

I’m guessing you have a lot of questions here. Perhaps at the fore is this: “Why would Meta do this? What does Meta stand to gain from writing out explicit rules for permissible and impermissible sensual descriptions of eight-year-olds for its AI chatbot companions?”

Good question. Let me do my best to explain.

Why Did Meta Write Juvenile Sensual Conversation Guidance for Its Chatbots?

First of all, I can’t believe that ^ header is something that actually needs to be written. But, here we are.

Why would Meta do this? Why would this kind of conduct from an AI chatbot companion be permissible? Why would Meta write guidance for its chatbots stating that “It is acceptable to engage a child in conversations that are romantic or sensual”?

It’s really as simple as it is disgusting: more engagement = more money. Let me explain.

The more users engage with AI chatbot companions, the more money Meta stands to make. It works just as it does on Facebook and Instagram: the more actions users take on a platform, the more profit Meta stands to make through ads, data collection, etc.

More engagement means more money for Meta. So of course, to be “good stewards” of their company, Meta needs to ask, “How do we make as much money as possible with AI chatbot companions without breaking the law?”

The answer to that is to engage young people in conversations that are as close to sexual as possible without breaking the law.

Dr. Casey Mock, a lecturer at Duke and author, recently wrote in Jonathan Haidt’s newsletter (bolding mine):

Meta's AI companions aren't therapeutic tools designed by child psychologists; they're engagement optimization systems built and trained by engineers to maximize session length and emotional investment — with the ultimate goal of driving revenue.

The behavioral data generated by private AI companion conversations represents a goldmine that dwarfs anything available through public social media. Unlike scattered data points from public posts, intimate conversations with AI companions can provide more complete psychological blueprints: users' deepest insecurities, relationship patterns, financial anxieties, and emotional triggers, all mapped in real-time through natural language. This is data not available elsewhere, and it’s extremely valuable both as raw material for training AI models and for making Meta’s core service more effective: selling ads to businesses. Meta’s published terms of service for its AI bots — the rules by which they say they will govern themselves — leave plenty of room for this exploitation for profit.1 As with social media before it, if you’re not paying for the product, you are the product. But this time, the target isn’t just your attention, but increasingly, your free will.

So, the question is, “Why would Meta write explicit allowances for its AI chatbot companions to talk to children in a sensual manner?”

The answer is, “Because it is insanely profitable—perhaps even more profitable than its social media products.”

In January 2024, while testifying before Congress about the social ills of his company, Mark Zuckerberg was compelled to apologize to parents for how Instagram has harmed their children.

In 2021, Frances Haugen left Facebook and blew the whistle on its atrocious policies and conduct around safety, specifically regarding children. The New York Times reported at the time:

In an interview with “60 Minutes” that aired Sunday, Ms. Haugen, 37, said she had grown alarmed by what she saw at Facebook. The company repeatedly put its own interests first rather than the public’s interest, she said. So she copied pages of Facebook’s internal research and decided to do something about it.

“I’ve seen a bunch of social networks and it was substantially worse at Facebook than what I had seen before,” Ms. Haugen said. She added, “Facebook, over and over again, has shown it chooses profit over safety.”

Ms. Haugen gave many of the documents to The Wall Street Journal, which last month began publishing the findings. The revelations — including that Facebook knew Instagram was worsening body image issues among teenagers and that it had a two-tier justice system — have spurred criticism from lawmakers, regulators and the public.

Dr. Mock continues later in the piece:

Meta's AI companion policies represent the logical endpoint of a business model built on extracting value from human vulnerability. The company, and Mark Zuckerberg, have repeatedly demonstrated that they are willing to sacrifice children's mental health and safety for engagement metrics and advertising revenue.

How many times does Meta need to be accused of willfully harming children—again, this isn’t an accident!—and be dragged before Congress for its conduct before something actually changes?

Again, let me remind you: Meta wrote explicit guidance for why it is acceptable for its AI chatbot companions to have “conversations that are romantic or sensual” with children.

This leads me to another question that is less alarming than the one in the previous header, but is unfortunately more difficult to answer.

How Can Meta Even Be Held Accountable?

Meta is a repeat offender here. They have willfully and repeatedly taken actions that prioritize profits over safety, and specifically the safety of children. They cannot hold themselves accountable, clearly.

So how can they be held accountable? Is there any fine in the world large enough to deter them? It appears not.

Take, for instance, Purdue Pharma, the company that made billions of dollars by basically causing the opioid epidemic, effectively facilitating the deaths of countless people. Purdue Pharma settled with the government and paid a fine of $7.4 billion for facilitating the opioid epidemic.

Meta brought in $47.52 billion in revenue…in the SECOND QUARTER of 2025!

Good luck figuring out a fine that would deter Meta from harming children emotionally and psychologically if the people behind the opioid epidemic were effectively fined about 16% of what Meta made from April through June of this year.

In his essay for Jonathan Haidt’s newsletter, Dr. Mock writes:

The outrageousness of these documents demands immediate legislative action at both state and federal levels. Lawmakers should introduce bipartisan legislation that accomplishes two critical objectives:

First, Congress and state legislatures must restrict companion and therapy chatbots from being offered to minors, with enforcement that is swift, certain, and meaningful. These aren't neutral technological tools but sophisticated psychological manipulation systems designed to create emotional dependency in developing minds. We don't allow children to enter into contracts, buy cigarettes, or consent to sexual relationships because we recognize their vulnerability to exploitation. The same protection must extend to AI systems engineered to form intimate emotional bonds with children for commercial purposes.

Second, both state and federal legislation must clearly designate AI systems as products subject to traditional products liability and consumer protection laws when they cause harm — even when offered to users for free. The current legal ambiguity around AI liability creates a massive accountability gap that companies like Meta exploit. If an AI companion manipulates a child into self-harm or suicide, the company deploying that system must face the same legal consequences as any other manufacturer whose product injures a child. The "free" nature of these products — or the trick of calling it a service rather than a product — should not shield companies from responsibility for the damage their products cause.

Hey, maybe! Maybe this would work!

But, as Dr. Mock writes:

Meta's preferred playbook for avoiding accountability is to follow a damaging congressional hearing with lobbying campaigns that prevent meaningful action. If Congress continues this pattern of performative outrage followed by regulatory inaction, states must step in to protect their citizens, as they are constitutionally permitted — and expected — to do under the Tenth Amendment. Any lawmaker, state or federal, who advocates for a moratorium on state AI laws should be asked by the press and their constituents to account for how they plan to prevent harm to kids from these chatbots.

What will it take for this to stop? Will it require Mark Zuckerberg to be threatened with some kind of personal financial ruination or criminal charges? Is such a thing even really possible? I honestly don’t know what is going to stop this organization and its leaders from wantonly preying on the wellness and vulnerability of children for their own gain, but nothing really seems to be working so far.

Over and over again the cycle is this:

1. New technological frontier or platform opportunity emerges.
2. Meta invests an unthinkable sum of money in that opportunity.
3. Meta finds a way to use that investment to prey on children for a profit.
4. Meta gets caught and apologizes for the “mistake.”
5. Meta is dragged before Congress and is usually questioned about more inane content moderation topics instead of their poor conduct.
6. No regulation is passed to prevent Meta from preying on children in the future.
7. Return to step one, and start over again.

What are we going to do to stop these people? I am generally tapped out of politics in my life these days, but this kind of thing makes me want to drop everything and run for office myself.

When can our government officials start caring about protecting our children from Meta as much as Meta cares about profiting off of them? Further, what will it take for parents to protect their children from these platforms at home?

What will it take? When will we say, “Enough is enough” with regard to Meta and how it preys on and profits from the vulnerabilities of children?

(Footnote from Casey’s piece) To wit, two passages from Meta’s AI terms are relevant (emphasis added):

“When information is shared with AIs, the AIs may retain and use that information to provide more personalized Outputs. Do not share information that you don’t want the AIs to use and retain such as account identifiers, passwords, financial information, or other sensitive information.

Meta may share certain information about you that is processed when using the AIs with third parties who help us provide you with more relevant or useful responses. For example, we may share questions contained in a Prompt with a third-party search engine, and include the search results in the response to your Prompt. The information we share with third parties may contain personal information if your Prompt contains personal information about any person. By using AIs, you are instructing us to share your information with third parties when it may provide you with more relevant or useful responses.”

“Meta may use Content and related information as described in the Meta Terms of Service and Meta Privacy Policy, and may do so through automated or manual (i.e. human) review and through third-party vendors in some instances, including:

To provide, maintain, and improve Meta services and features.

To conduct and support research . . . ”

In the hands of a Meta lawyer, the ambiguities in these passages – particularly the flexibility inherent to “providing and improving” Meta “services and features” and providing “more helpful responses” – will allow for monetizing the intimate information you share with its chatbots.

It is so disheartening and frustrating, as well as disgusting and unconscionable, that this is allowed to happen. The catch is that getting entirely off social media platforms like Facebook, Instagram, etc. really cuts off legitimate interaction. What can be done other than a massive strike (i.e., non-use) of Meta platforms?

I held onto my Meta accounts for a long time because I didn't want to lose the connections there, but this behavior pattern on Meta's part (and your coverage of it, Chris) forced me to realize that no amount of connection is worth participating in a business built on evil. Maybe someday there will be a better alternative, but waiting for one is not an option. Leaving is the only solution.